Diffusion Model Manifold Learning

In machine learning, the rise of diffusion models marks a significant turning point, and one closely tied to manifold learning. This interdisciplinary frontier offers more than a method for representing data. It is also a rewarding, even mood-boosting, area of exploration for researchers, practitioners, and hobbyists alike. The prospect of capturing complex data distributions and generating meaningful outputs from them is genuinely inspiring. This article looks at diffusion models, how they relate to manifold learning, and why that relationship is becoming increasingly important.

Diffusion models have emerged as a powerful class of generative models. Earlier generative models often struggle to capture the full spread of a data distribution (GANs, for instance, are prone to mode collapse). Diffusion models instead rely on a pair of stochastic processes. A fixed forward process gradually corrupts data by injecting Gaussian noise, and a learned reverse process removes that noise step by step. Running the reverse process from pure noise yields new high-dimensional samples that concentrate near the low-dimensional manifolds on which real data are thought to lie. The approach rests on learning the underlying structure of the data distribution, much like learning to navigate a multi-dimensional landscape.
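
In the widely used DDPM (denoising diffusion probabilistic model) formulation, one of several equivalent ways to set this up, the corrupting and restoring steps can be written as follows. The noise schedule $\beta_t$ is a design choice, and the symbols here are standard DDPM notation rather than anything defined elsewhere in this article. The forward kernel is fixed. Its closed form lets one jump straight from $x_0$ to any $x_t$, and for large $T$ the endpoint $x_T$ is nearly pure Gaussian noise. Only the reverse kernel $p_\theta$ is learned.

```latex
\begin{aligned}
q(x_t \mid x_{t-1}) &= \mathcal{N}\big(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t I\big), \qquad t = 1,\dots,T, \\
q(x_t \mid x_0) &= \mathcal{N}\big(x_t;\ \sqrt{\bar\alpha_t}\,x_0,\ (1-\bar\alpha_t) I\big),
  \qquad \alpha_t = 1-\beta_t,\quad \bar\alpha_t = \textstyle\prod_{s=1}^{t}\alpha_s, \\
p_\theta(x_{t-1} \mid x_t) &= \mathcal{N}\big(x_{t-1};\ \mu_\theta(x_t, t),\ \sigma_t^2 I\big).
\end{aligned}
```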

At the heart of diffusion models lies the diffusion process itself, which resembles the gradual spread of particles through a medium. These models add noise over many small steps and then learn to remove it step by step, balancing noise against information along the way. This iterative framework fits naturally with manifold learning, in which the manifold is a geometric structure that captures the intrinsic properties of a complex data set. Working in this area engages the analytical mind, and for many researchers it is also an enjoyable, mood-enhancing exploration of the geometry underlying their data.
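
To make the add-then-remove cycle concrete, here is a small, runnable toy sketch. It is not a production recipe. The "data" are 64 points on the unit circle, a 1-D manifold in $\mathbb{R}^2$. The forward process uses the linear $\beta$ schedule from the original DDPM paper (1e-4 to 0.02 over 1000 steps). Training a neural network is beyond a short example, so the learned denoiser is swapped for the exact posterior mean $E[x_0 \mid x_t]$ of this finite data set. The helpers `make_schedule`, `add_noise`, `ideal_denoiser` and `sample` are hypothetical names chosen for illustration, not a library API.

```python
import numpy as np


def make_schedule(T=1000, beta_min=1e-4, beta_max=0.02):
    """Linear noise schedule: returns beta_t, alpha_t and alpha_bar_t (cumulative product)."""
    betas = np.linspace(beta_min, beta_max, T)
    alphas = 1.0 - betas
    return betas, alphas, np.cumprod(alphas)


def add_noise(x0, alpha_bar, rng):
    """Forward process in closed form: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps."""
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bar) * x0 + np.sqrt(1.0 - alpha_bar) * eps


def ideal_denoiser(x_t, alpha_bar, data):
    """Hypothetical stand-in for a trained network: exact E[x0 | x_t]
    when x0 is drawn uniformly from the finite set `data`."""
    diff = x_t[:, None, :] - np.sqrt(alpha_bar) * data[None, :, :]      # (n, m, d)
    logits = -np.sum(diff ** 2, axis=-1) / (2.0 * (1.0 - alpha_bar))    # Gaussian log-likelihoods
    logits -= logits.max(axis=1, keepdims=True)                         # numerically stable softmax
    weights = np.exp(logits)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ data                                               # posterior mean, (n, d)


def sample(data, n=256, T=1000, seed=0):
    """Reverse (denoising) process: ancestral DDPM sampling starting from pure noise."""
    rng = np.random.default_rng(seed)
    betas, alphas, alpha_bars = make_schedule(T)
    x = rng.standard_normal((n, data.shape[1]))                         # x_T ~ N(0, I)
    for t in range(T - 1, -1, -1):
        ab = alpha_bars[t]
        ab_prev = alpha_bars[t - 1] if t > 0 else 1.0
        x0_hat = ideal_denoiser(x, ab, data)
        # Mean of the Gaussian posterior q(x_{t-1} | x_t, x0), with x0 replaced by its estimate
        mean = (
            (np.sqrt(ab_prev) * betas[t] / (1.0 - ab)) * x0_hat
            + (np.sqrt(alphas[t]) * (1.0 - ab_prev) / (1.0 - ab)) * x
        )
        if t > 0:
            var = betas[t] * (1.0 - ab_prev) / (1.0 - ab)
            x = mean + np.sqrt(var) * rng.standard_normal(x.shape)
        else:
            x = mean                                                    # final step is deterministic
    return x


# Toy data: 64 points on the unit circle, a 1-D manifold in R^2
theta = np.linspace(0.0, 2.0 * np.pi, 64, endpoint=False)
data = np.stack([np.cos(theta), np.sin(theta)], axis=1)

rng = np.random.default_rng(1)
noisy = add_noise(data, make_schedule()[2][-1], rng)   # nearly pure noise at t = T
samples = sample(data)
radii = np.linalg.norm(samples, axis=1)
print("mean radius after full noising:", np.linalg.norm(noisy, axis=1).mean())
print("sample radii range:", radii.min(), radii.max())
```

The forward pass scatters the circle into an isotropic Gaussian cloud, and the reverse pass pulls every sample back onto the circle. Because the exact denoiser for a finite data set effectively memorizes it, each sample lands on, or very close to, one of the 64 training points. A trained network only approximates this denoiser, which is one informal way to see how it can produce new points near the manifold rather than copies of the training set.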

Manifold learning is therefore central to this paradigm. It rests on the observation that high-dimensional data often lie on or near a lower-dimensional manifold embedded in the ambient space. The manifold describes a continuum of data points that share similar characteristics, giving a compact representation that is both efficient and informative. Diffusion models play an important role within this framework. Their denoising process steers samples back toward regions of high data density, so it implicitly captures the manifold's structure and helps practitioners extract insight from seemingly convoluted data sets.
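
The idea of a low-dimensional structure embedded in a higher-dimensional space can be checked numerically. A minimal sketch uses local PCA, one simple manifold-learning diagnostic that this article does not itself prescribe. A circle is embedded in $\mathbb{R}^{10}$ through a random orthonormal frame. Global PCA reports two dimensions, because the circle spans a plane. PCA restricted to small neighbourhoods reports one, the circle's intrinsic dimension. The neighbourhood size `k=15`, the 95% variance threshold, and all function names are illustrative assumptions.

```python
import numpy as np


def pca_dimension(points, var_threshold=0.95):
    """Number of principal components needed to explain `var_threshold` of the variance."""
    centred = points - points.mean(axis=0)
    s = np.linalg.svd(centred, compute_uv=False)
    explained = np.cumsum(s ** 2) / np.sum(s ** 2)
    return int(np.searchsorted(explained, var_threshold) + 1)


def local_pca_dimension(X, k=15, var_threshold=0.95):
    """Median PCA dimension over each point's k-nearest-neighbour patch."""
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    neighbours = np.argsort(sq_dists, axis=1)[:, : k + 1]   # each row includes the point itself
    dims = [pca_dimension(X[nbrs], var_threshold) for nbrs in neighbours]
    return int(np.median(dims))


def circle_in_high_dim(n=500, ambient_dim=10, seed=0):
    """Sample a unit circle and embed it in R^ambient_dim via a random orthonormal 2-frame."""
    rng = np.random.default_rng(seed)
    theta = rng.uniform(0.0, 2.0 * np.pi, n)
    circle = np.stack([np.cos(theta), np.sin(theta)], axis=1)           # (n, 2)
    frame, _ = np.linalg.qr(rng.standard_normal((ambient_dim, 2)))      # (ambient_dim, 2)
    return circle @ frame.T                                             # (n, ambient_dim)


X = circle_in_high_dim()
print("ambient:", X.shape[1], "global PCA:", pca_dimension(X), "local PCA:", local_pca_dimension(X))
```

Global linear methods see only the flat subspace the data occupy. Looking at small patches recovers the curved, one-dimensional structure, and this gap is the kind of structure that manifold learning targets.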

Combining diffusion models with manifold learning opens up a wide range of applications. In computer vision, for instance, it has driven major advances in image generation and editing. When developers generate realistic images from latent representations, they exploit the power of diffusion models while keeping their outputs close to the manifold of natural images within the high-dimensional pixel space. This synergy improves the quality of generated images and deepens our understanding of visual data, producing an exciting fusion of creativity and technical capability.

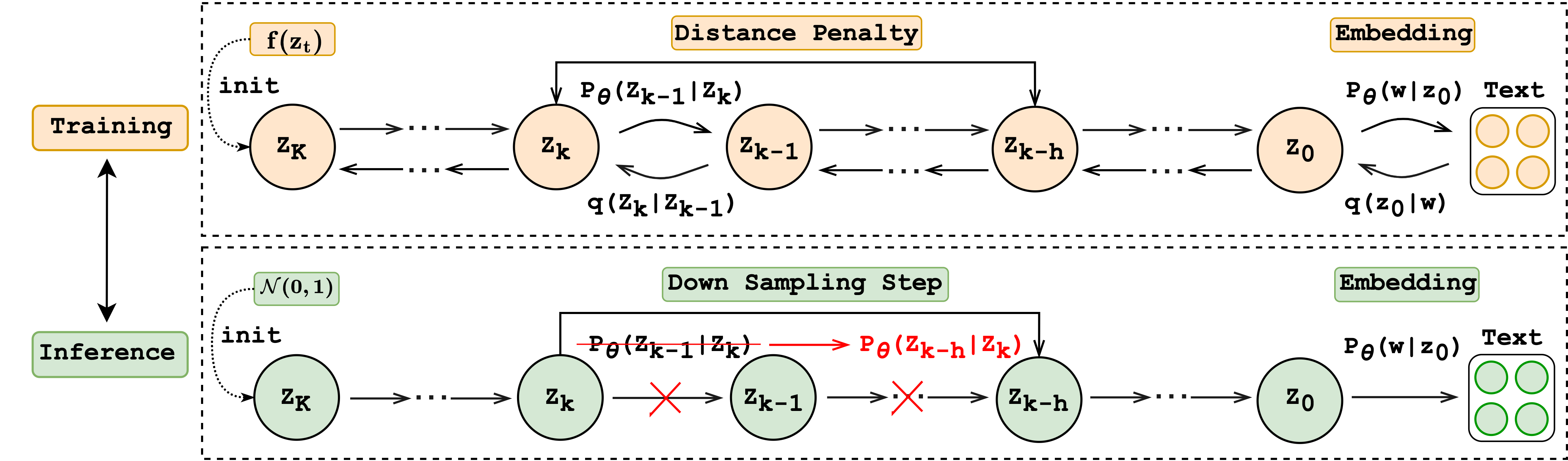

The impact of diffusion models also extends beyond vision. In natural language processing, diffusion processes can be used to generate coherent text. By mapping text into continuous representations, these models can, in principle, produce creative narratives that resonate with readers and offer an uplifting experience for audiences. Picture an AI that captures not just the syntax of language but also the emotional nuances that words can evoke. This is the future that diffusion models point toward, one in which the line between machine-generated and human creativity begins to blur.

As one explores diffusion models and manifold learning further, their implications for healthcare and bioinformatics become increasingly clear. Modeling complex biological data, such as genomic sequences or protein structures, with these methods could help advance precision medicine. By uncovering the manifold structure underlying biological phenomena, researchers may identify novel therapeutic targets and design personalized treatment strategies. The prospect of such a shift in healthcare fosters optimism across the research institutions pursuing these discoveries.

Diffusion model manifold learning is also valuable in finance and econometrics. Financial data sets are often rife with non-linear relationships and intricate patterns. By combining diffusion processes with manifold learning techniques, analysts may uncover hidden trends and improve both forecasting accuracy and risk assessment. That advantage can give stakeholders greater confidence when making decisions under uncertainty.

In summary, the interplay between diffusion models and manifold learning highlights the capabilities and opportunities of modern machine learning. Their intersection clarifies complex relationships in data and opens pathways to innovative applications across diverse fields. As researchers and practitioners continue to explore this largely uncharted territory, they refine the tools of their trade while embarking on a captivating journey that rewards creativity, lifts the mood, and feeds an insatiable curiosity. The future holds considerable promise, and the synergy of these methods may enable discoveries once thought out of reach.


Can Diffusion Model Achieve Better Performance In Text Generation

paperswithcode.com### GitHub - Ruslanmv/Diffusion-Models-in-Machine-Learning: Simple

paperswithcode.com### GitHub - Ruslanmv/Diffusion-Models-in-Machine-Learning: Simple

github.com### Introduction To Diffusion Models For Machine Learning

www.assemblyai.com### How Diffusion Models Work - DeepLearning.AI

www.assemblyai.com### How Diffusion Models Work - DeepLearning.AI

www.deeplearning.ai### What Is Diffusion Model In Deep Learning?

www.deeplearning.ai### What Is Diffusion Model In Deep Learning?

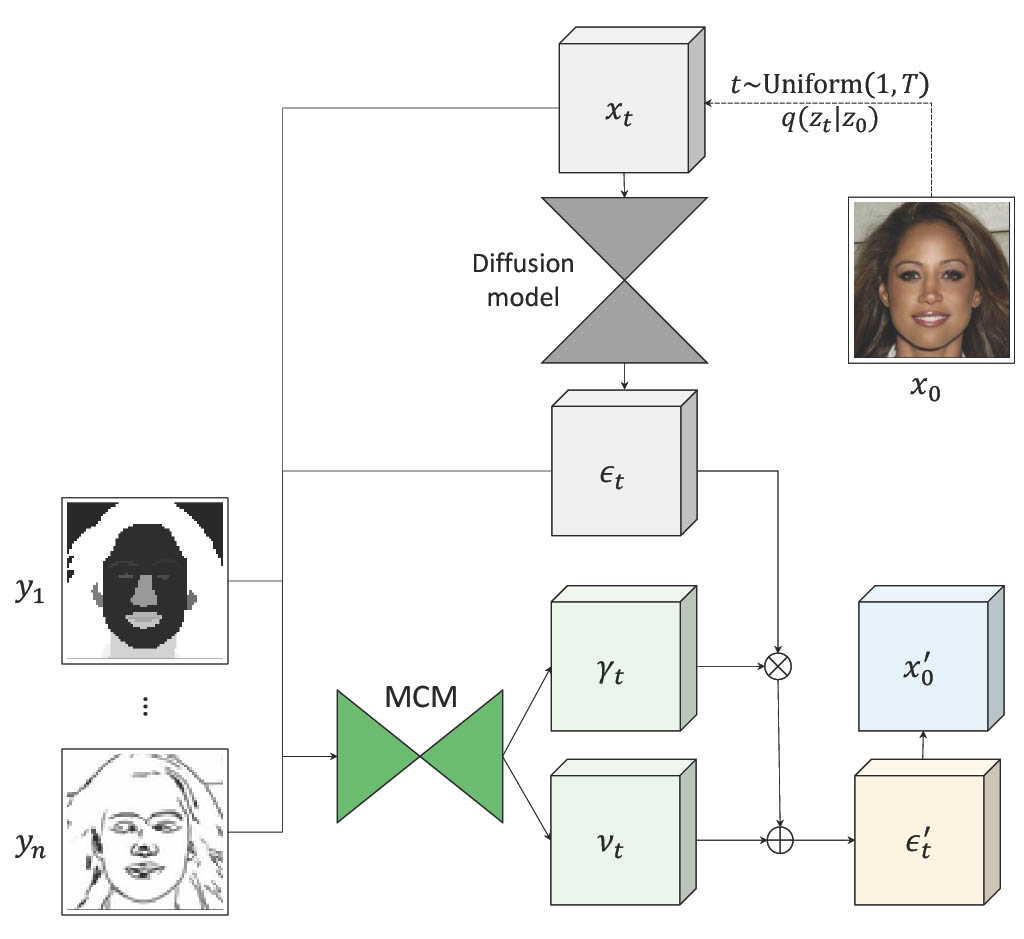

www.rebellionresearch.com### Modulating Pretrained Diffusion Models For Multimodal Image Synthesis

www.rebellionresearch.com### Modulating Pretrained Diffusion Models For Multimodal Image Synthesis

mcm-diffusion.github.io### The Panoramic Guide To Diffusion Models In Machine Learning

mcm-diffusion.github.io### The Panoramic Guide To Diffusion Models In Machine Learning

shelf.io### How Diffusion Models Work - DeepLearning.AI

shelf.io### How Diffusion Models Work - DeepLearning.AI

www.deeplearning.ai### Diffusion Model As Representation Learner | DeepAI

www.deeplearning.ai### Diffusion Model As Representation Learner | DeepAI

deepai.org### Learning Structure-Guided Diffusion Model For 2D Human Pose Estimation

deepai.org### Learning Structure-Guided Diffusion Model For 2D Human Pose Estimation